Executive Context

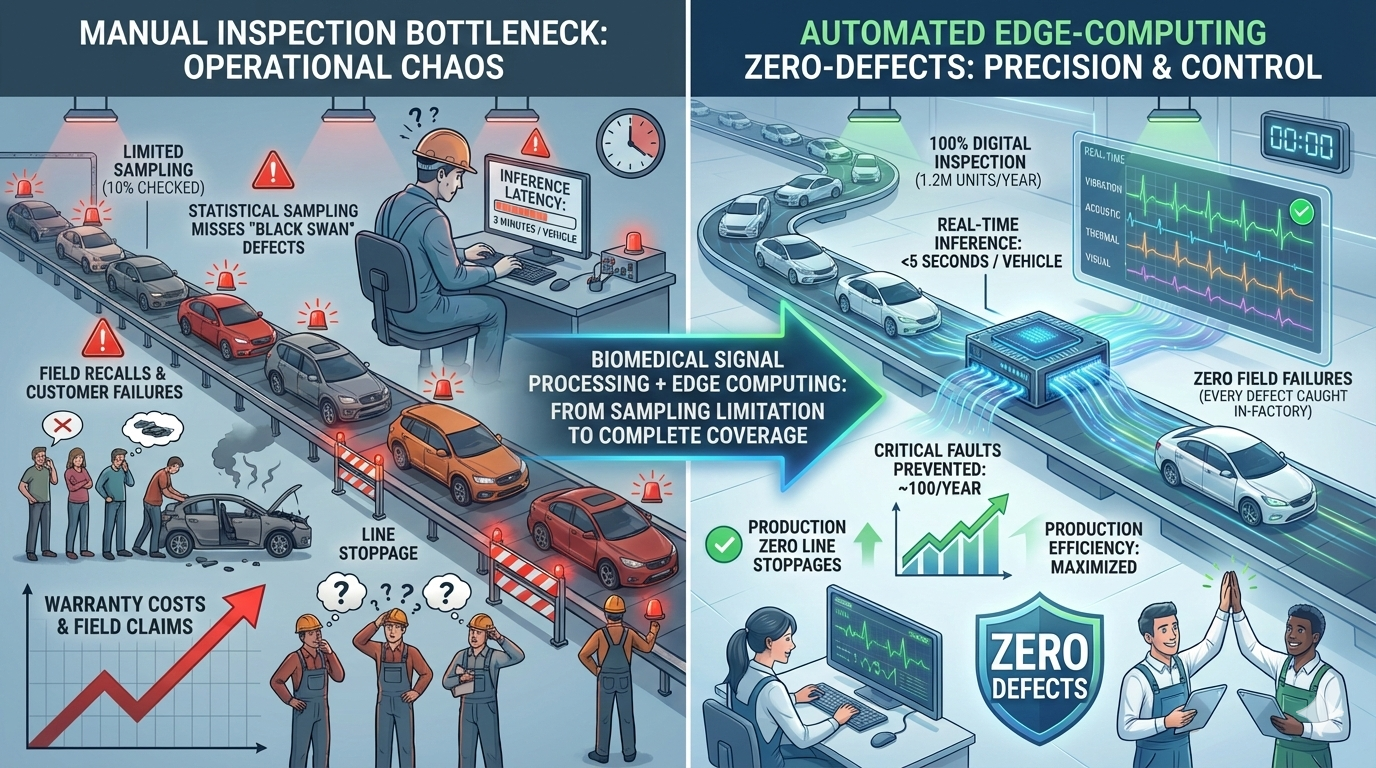

One of the world’s largest automotive plants (producing ~100,000 units/month) faced a critical quality control bottleneck. The production line cycle time was roughly 5 minutes per engine, with a hard cap of 30 seconds for end-of-line testing.

The binding constraint was compute latency. Existing ML models built by internal teams needed 3 minutes to return a result, six times the 30-second test window and therefore unusable on a moving assembly line. As a result, the plant relied on statistical sampling, which let rare “Black Swan” defects escape into the field.
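
The latency gap can be laid out as simple arithmetic from the figures stated here (plus the under-5-second edge target set later). The annual volume is just the stated monthly output times twelve, which is where the 1.2 million/year in the results table comes from.

$$
\begin{aligned}
t_{\text{legacy}} &= 3\ \text{min} = 180\ \text{s} = 6 \times 30\ \text{s} \\
t_{\text{edge}} &< 5\ \text{s} = \tfrac{1}{6} \times 30\ \text{s} \\
N_{\text{year}} &= 100{,}000\ \tfrac{\text{units}}{\text{month}} \times 12\ \text{months} = 1.2 \times 10^{6}\ \text{units}
\end{aligned}
$$

In other words, the legacy model would have needed six test windows per engine, while the edge target fits inside one-sixth of a single window.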

The Actual Problem

While the stated problem was “Automated Defect Detection,” the underlying engineering issue was Cross-Domain Blindness.

The client was applying Computer Vision logic (heavy neural networks) to a Signal Processing problem. By treating the engine vibration data like an image, they ended up with massive, slow models. Furthermore, with only ~20 historical defects per 4 million units, they faced a “Small Data” problem that standard Deep Learning could not solve.

Diagnostic Approach

We set aside the standard “Industrial AI” playbook and framed the problem the way a biomedical signal-processing team would, against three constraints:

- Data Sufficiency: We had 43 vibration parameters but only 20 “bad” samples, far too few to train a supervised defect classifier.

- Methodological Fit: We recognized that an engine block behaves, in signal terms, like a human heart. It has a rhythm, and a defect is simply an “arrhythmia.”

- Infrastructure Readiness: The solution had to run on “Near-ECU” edge hardware with <5 seconds of inference time.

Strategic Intervention

We imported algorithms from Cardiology to solve a Mechanical Engineering problem.

1. The “ECG” Algorithm (T-Digest)

We implemented T-Digest, a compact probabilistic data structure for estimating quantiles of streaming data that the team borrowed from medical heart-rate monitoring. It builds a “normal” signature for every 30-second test window, and the system flags any deviation from that signature as an anomaly, so it never needs to be trained on specific defects.
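
A minimal sketch of the idea, assuming a single scalar feature per test window (for example a vibration RMS value; the feature choice is an assumption, not from the source). It implements a simplified merging t-digest with the arcsine (k1) scale function, builds a reference digest from known-good readings, and flags a reading that falls outside a central quantile band. The names `TDigest`, `build_reference` and `is_anomalous`, and the 0.1%/99.9% band, are hypothetical; a production line would more likely use a maintained t-digest library.

```python
import math
import random
from bisect import bisect_left


class TDigest:
    """Simplified merging t-digest using the k1 (arcsine) scale function."""

    def __init__(self, compression=100.0, buffer_size=500):
        self.delta = compression        # larger -> more centroids, better accuracy
        self.buffer_size = buffer_size  # raw points held before a merge pass
        self.means, self.weights = [], []
        self.buffer = []
        self.total = 0.0

    def _k(self, q):
        # k changes fastest near q=0 and q=1, so tail centroids stay small.
        q = min(max(q, 0.0), 1.0)
        return self.delta / (2 * math.pi) * math.asin(2 * q - 1)

    def add(self, x, w=1.0):
        self.buffer.append((x, w))
        if len(self.buffer) >= self.buffer_size:
            self._merge()

    def _merge(self):
        if not self.buffer:
            return
        points = sorted(list(zip(self.means, self.weights)) + self.buffer)
        self.buffer = []
        n = sum(w for _, w in points)
        means, weights = [], []
        cur_mean, cur_w = points[0]
        q_left = 0.0  # quantile at the left edge of the current centroid
        for x, w in points[1:]:
            q_right = q_left + (cur_w + w) / n
            if self._k(q_right) - self._k(q_left) <= 1.0:
                cur_w += w                              # absorb the point...
                cur_mean += (x - cur_mean) * w / cur_w  # ...and update the weighted mean
            else:
                means.append(cur_mean)
                weights.append(cur_w)
                q_left += cur_w / n
                cur_mean, cur_w = x, w
        means.append(cur_mean)
        weights.append(cur_w)
        self.means, self.weights, self.total = means, weights, n

    def quantile(self, q):
        self._merge()
        if not self.means:
            raise ValueError("empty digest")
        # Cumulative weight at each centroid's centre, then linear interpolation.
        centers, cum = [], 0.0
        for w in self.weights:
            centers.append(cum + w / 2)
            cum += w
        target = q * self.total
        if target <= centers[0]:
            return self.means[0]
        if target >= centers[-1]:
            return self.means[-1]
        i = bisect_left(centers, target)
        c0, c1 = centers[i - 1], centers[i]
        m0, m1 = self.means[i - 1], self.means[i]
        return m0 + (m1 - m0) * (target - c0) / (c1 - c0)


def build_reference(healthy_values, compression=100.0):
    """Digest of a scalar feature taken from known-good test windows."""
    digest = TDigest(compression)
    for v in healthy_values:
        digest.add(v)
    return digest


def is_anomalous(reference, value, lo_q=0.001, hi_q=0.999):
    """Flag a reading outside the reference's central quantile band."""
    return not (reference.quantile(lo_q) <= value <= reference.quantile(hi_q))


if __name__ == "__main__":
    random.seed(0)
    healthy = [random.gauss(0.0, 1.0) for _ in range(100_000)]  # synthetic stand-in data
    ref = build_reference(healthy)
    print(is_anomalous(ref, 0.3))  # False
    print(is_anomalous(ref, 6.0))  # True
```

The appeal of a t-digest here is that memory stays bounded no matter how many windows are streamed, and its accuracy is concentrated in the tails, which is exactly where anomaly thresholds sit.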

2. Auto-ID Logic Gate

To handle variant changes between consecutive engines (e.g., Engine Type A followed by Engine Type B), we built a lightweight classifier that identified the engine variant from its “heartbeat” alone and loaded the correct parameter set in milliseconds.
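
One way such a gate could look, sketched as a nearest-centroid classifier. This is a swapped-in illustration, since the source does not say which classifier was used. The three features (zero-crossing frequency, RMS, crest factor), the reference signatures, the feature scales and the parameter sets are all hypothetical placeholders.

```python
import math


def heartbeat_features(samples, sample_rate_hz):
    """Return (zero-crossing frequency in Hz, RMS, crest factor) for one window."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    freq_hz = crossings / (2 * n / sample_rate_hz)  # two crossings per cycle
    rms = math.sqrt(sum(c * c for c in centered) / n)
    crest = max(abs(c) for c in centered) / rms
    return (freq_hz, rms, crest)


# Hypothetical reference signatures (freq Hz, RMS, crest factor) per engine variant.
VARIANT_SIGNATURES = {
    "type_a": (30.0, 0.70, 1.4),
    "type_b": (45.0, 1.10, 1.6),
}
# Hypothetical per-feature scales so no single unit dominates the distance.
FEATURE_SCALES = (5.0, 0.2, 0.3)
# Hypothetical parameter sets loaded once the variant is known.
PARAMETER_SETS = {
    "type_a": {"lo_q": 0.001, "hi_q": 0.999},
    "type_b": {"lo_q": 0.0005, "hi_q": 0.9995},
}


def identify_variant(features):
    """Pick the variant whose scaled signature is closest (Euclidean distance)."""
    def scaled_distance(signature):
        return math.dist(
            [f / s for f, s in zip(features, FEATURE_SCALES)],
            [g / s for g, s in zip(signature, FEATURE_SCALES)],
        )
    return min(VARIANT_SIGNATURES, key=lambda v: scaled_distance(VARIANT_SIGNATURES[v]))


def load_parameters(samples, sample_rate_hz):
    """Identify the variant from the raw window, then return its parameter set."""
    variant = identify_variant(heartbeat_features(samples, sample_rate_hz))
    return variant, PARAMETER_SETS[variant]


if __name__ == "__main__":
    window = [math.sin(2 * math.pi * 30 * t / 1000) for t in range(1000)]  # 1 s at 1 kHz
    variant, params = load_parameters(window, 1000)
    print(variant)  # type_a
```

A nearest-centroid rule needs only one stored signature per variant and a handful of arithmetic operations per window, which is consistent with the millisecond-scale switching described above.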

3. Edge Deployment

By replacing heavy neural networks with efficient signal processing, we made the model small enough to run locally on the line, eliminating cloud round-trip latency entirely.
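
Running on the line also means latency can be checked directly on the edge box. A minimal timing harness, assuming a Python scorer: `timed_inference` and `rms_score` are hypothetical names, `rms_score` is only a stand-in for the real pipeline, and the 1 kHz sample rate is an assumption. It scores one synthetic 30-second window several times and compares worst-case latency with the 5-second target.

```python
import math
import statistics
import time


def timed_inference(score_fn, window, budget_s=5.0, repeats=5):
    """Score one test window `repeats` times and compare worst-case latency to the budget."""
    latencies = []
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = score_fn(window)
        latencies.append(time.perf_counter() - start)
    worst = max(latencies)
    return {
        "result": result,
        "worst_s": worst,
        "median_s": statistics.median(latencies),
        "within_budget": worst < budget_s,
    }


def rms_score(window):
    """Hypothetical lightweight scorer: root-mean-square of the window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


if __name__ == "__main__":
    # Synthetic 30-second window at an assumed 1 kHz sample rate.
    window = [math.sin(2 * math.pi * 30 * t / 1000) for t in range(30_000)]
    report = timed_inference(rms_score, window)
    print(report["within_budget"], round(report["result"], 3))  # True 0.707
```

Budgeting against the worst of several runs rather than the average is the safer choice on a line where a single late result means an untested engine.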

Outcome & Attribution

The shift from “Big Data AI” to “Smart Signal Processing” removed the bottleneck.

| Metric | Pre-Intervention | Post-Intervention | Impact |
|---|---|---|---|
| Inference Time | 3 Minutes | <5 Seconds | Enabled 100% coverage |
| Inspection Scale | Statistical Sampling | 1.2 Million Units / Year | Every unit tested |
| Defect Capture | Reactive / Field Claims | ~100 Critical Faults / Year | Prevented field failures |
| Line Stoppages | Frequent | Zero | Seamless integration |

Strategic Takeaway for Executives

The answer to your manufacturing problem is often found in a hospital, not a factory. By applying Biomedical Signal Processing to mechanical data, we solved a latency problem that standard “Big Data” approaches could not touch.

For enterprise leaders: Don’t let your data science team default to “Standard ML” when the physics of your problem demands a specialist approach.

Open for confidential dialogue regarding high-speed quality control and edge computing.