Executive Context

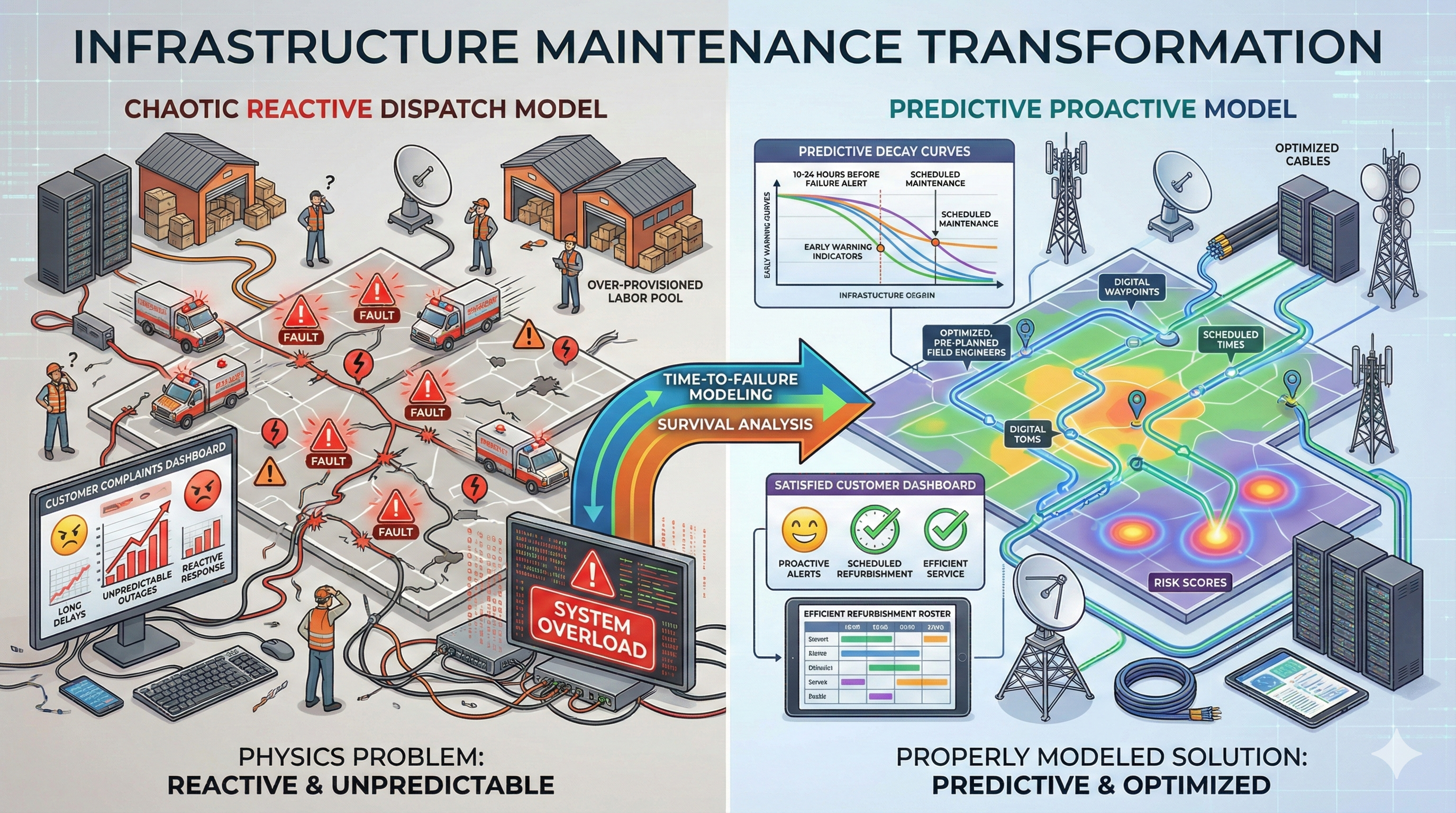

A leading European telecommunications provider faced escalating operational costs driven by reactive maintenance of its DSL infrastructure. The organization maintained a massive, capital-intensive pool of field engineers to address network faults that were infrequent but highly disruptive to the customer base.

The operational constraint was uncertainty: because the organization could not predict where the next fault would occur, they were forced to over-provision labor across the entire network. The business objective was to transition from a “break-fix” model to a predictive stance, identifying critical faults 8–16 hours in advance to optimize workforce allocation and preserve customer trust.

The Actual Problem

While the stated problem was “fault prediction,” the underlying economic issue was inefficient risk pricing.

The organization was attempting to solve a physics problem (entropy and decay) with a marketing tool (binary classification). Previous attempts failed because they treated infrastructure failure as a standard pattern recognition task. However, with a fault rate of roughly 1 in 2 million active lines, the “signal” was statistically invisible amidst the noise.

This misalignment meant the organization was burning capital on “false positives” rather than accurately pricing the risk of failure.

Diagnostic Approach

To unlock value, we moved beyond standard tool selection to evaluate the physics of the infrastructure failure.

- Data Sufficiency: Initial diagnostics revealed that simple signal data was insufficient—analogous to trying to predict a car crash by looking only at the speedometer. We expanded the aperture to include DSL periphery data and hardware heat sensor telemetry.

- Methodological Fit: We identified that standard classification models were failing due to the extreme class imbalance. The system required a shift from asking “Is this broken?” to asking “How fast is this decaying?”

- Infrastructure Readiness: The client operated under strict data sovereignty and on-premise constraints, ruling out cloud-native scalable solutions.

Strategic Intervention

The intervention focused on re-engineering the analytical approach and the operational workflow it supported.

1. Shift to Time-to-Failure (TTF) Modeling

We abandoned binary classification in favor of Survival Analysis and Decay Modeling. By mapping the gradual degradation of signal integrity and heat signatures, we calculated a probabilistic “time-to-failure” for every specific line. This allowed the system to flag high-probability failures 10–24 hours before service disruption.

2. Localized High-Frequency Scoring

Given the on-premise constraints, we engineered custom Spark clusters to execute localized, high-frequency scoring. This architecture allowed the organization to re-evaluate the risk profile of the entire network every hour without violating data residency protocols.

3. Operational Governance

We restructured the dispatch logic. Instead of reacting to customer tickets, the system generated preemptive alerts. This allowed the client to prioritize the “refurbishment roster” based on risk rather than geography.

Outcome & Attribution

The shift from reactive to probabilistic operations yielded immediate, measurable economic impact.

| Metric | Pre-Intervention | Post-Intervention | Impact |

|---|---|---|---|

| Annual Cost Savings | N/A | £11 Million | Attributable to labor optimization |

| CSAT Score | 4.6 / 10 | 8.7 / 10 | Shifted from “Apology” to “Advisory” |

| Fault Rate | 1 in 2,000,000 | 1 in 4,000,000 | Improved subnet health |

| Labor Efficiency | Double shifts common | 40% Reduction | Eliminated excess standby hours |

Strategic Takeaway for Executives

AI is often misapplied as a universal “fixer” rather than a specific instrument. In this case, success did not come from a more powerful algorithm, but from correctly defining the problem as one of decay rather than classification.

For enterprise leaders, the lesson is clear: The value of AI lies not in the tool itself, but in the precision with which it is mapped to the operational reality.

Open for confidential dialogue regarding predictive infrastructure and capital efficiency.